Metamodelling

Overview

Multivariate metamodelling is a way to make simplified models of mechanistic models that can be run faster and are more interpretable. This opens up a set of possibilities for how to use already existing mechanistic models to optimize processes and improve understanding.

Our Multivariate Metamodelling methods allow our customers to:

- Understand and verify underlying relationships in the mechanistic model

- Speed up mechanistic models to enable online use

- Invert the model to estimate internal states and parameters

- Combine metamodel outputs with empirical measurements for a hybrid modelling approach that combines “the best of both worlds” and models the process as it really is, in light of prior knowledge.

- Find neutral parameter sets for optimization of secondary KPIs while keeping the main KPI constant

Example applications

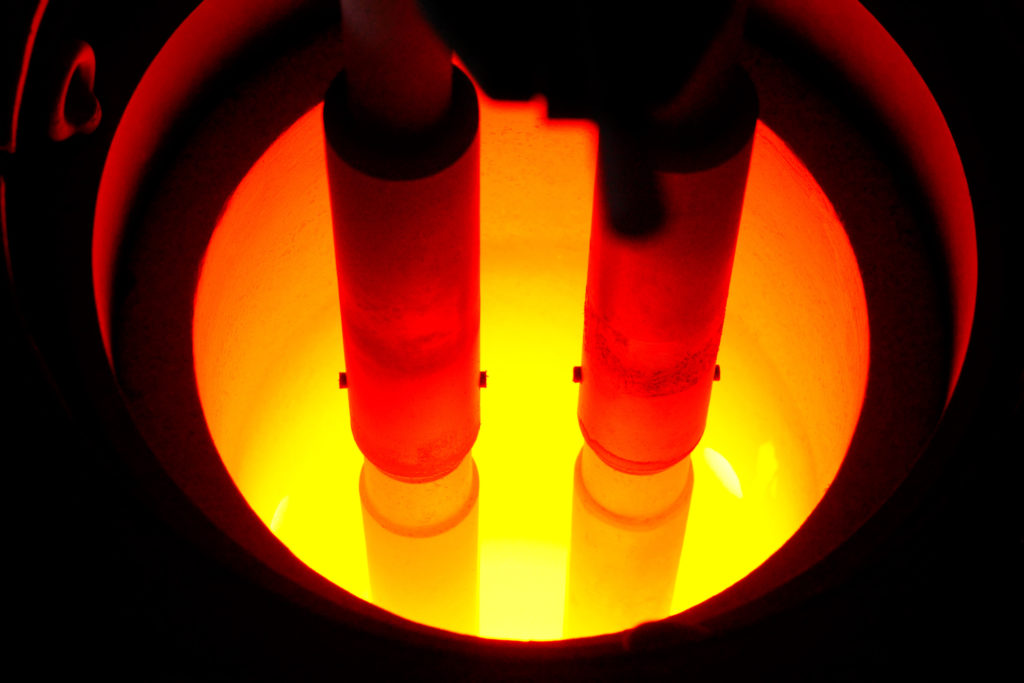

- Simpler real-time use of mechanistic models in metallurgy: Speed-up of FEM of electrical conditions in FeMn furnace

- Faster computations: Speed-up of FEM of human facial expressions

- Faster Covid-19 forecasting: Speed-up of population-wide agent-based model

- Seeing new output patterns: The repertoire of a spatiotemporal PDE model generating animal skin patterns, expanded and inspected

- Advanced imaging of cancer cells: Spectral modelling to separate light scattering from light absorbance in synchrotron FTIR microscopy

- Comparison of competing model alternatives: Overlap and uniqueness of two spatiotemporal models of heart muscle elasticity

- Flexible model selection and fast fitting to data: Summarizing 40 different models of growth curves into the «meta-modelome of line curvature»

Mechanistic models

Models describing expected behavior.

Mechanistic, or theory-driven, models are widely used to describe the behavior of a system based on known theory. These mathematical models could be for furnaces in the metallurgy industry, wind turbines, the spread of infectious diseases, or the cardiovascular system. Examples: finite element models of heat diffusion or electrical fields, differential equations of reaction kinetics, or computational fluid dynamics models of turbulence in gases or liquids.

A good mechanistic model describes essential properties and behaviors, according to the laws of physics within the selected process design. Such a model is a valuable source of prior knowledge, especially about how the system will behave under conditions where you don’t even WANT to have observational data, for example situations that cause harm to equipment or personnel.

Mechanistic models are not perfect, but they represent valuable knowledge.

Mechanistic mathematical models often encapsulate the deep prior knowledge of domain experts. However, mechanistic models may be slightly oversimplified – they often do not take all possible interactions between the parameters into account. Still, they capture valuable insight into how the laws of physics determine the input-output relationships of the system, and how the system was intended to function.

Another issue is that many of the coefficients built into mechanistic/first-principles models are estimated from specific experiments that might not be generally applicable, but represent a “best estimate”. This might introduce some bias in the models, so that they cannot be extrapolated uncritically to other similar, but not identical, applications.

Old models put to rest.

Mechanistic models are often used for the design of processes and assets. But today, they are seldom used in the operations phase. One reason is that they are often slow to compute and difficult to fit to streams of process measurements. Another reason is that the model is no longer trusted: maybe there were discrepancies between the planned “design” in the model and what was actually “built”, or perhaps the model has not been updated after later modernizations of the furnace. Raw materials and operating conditions also change over time, reducing the fit with the original mechanistic model.

So, theory-driven mechanistic models might not be perfect. But they can be supplemented by data-driven models based on actual process measurements. This is called “hybrid modelling”: it combines mechanistic and data-driven modelling, using the advantages of each while avoiding their disadvantages.

Multivariate Metamodelling

Metamodelling makes hybrid modelling faster and easier.

A multivariate metamodel is a simplified description of the output behavior of a mechanistic model under different input conditions. When building a metamodel, we need to describe the mechanistic model’s behavior. This means running the mechanistic model repeatedly under different conditions, making sure that the relevant range of conditions is included.

The model inputs (various combinations of relevant system design descriptions, parameter values, initial conditions and computational controls) are chosen so that the model outputs will be representative of the use case of interest, as well as of unwanted but possible deviations from it. This set of computer simulations with the mechanistic model needs to be done only once.

At Idletechs we do this through our highly efficient experimental designs, to make the most cost-effective computer simulations of parameter combinations, spanning the whole relevant range of behavior with respect to the important statistical properties. Read more in Design of Experiments.
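As a rough illustration of this step, the Python/NumPy sketch below builds a Latin hypercube design (one common space-filling design, used here only as a stand-in; it is not necessarily the design we use in practice) and runs a model once per design point. `toy_mechanistic_model`, its three inputs and their ranges are hypothetical placeholders for a real, slow simulator.

```python
import numpy as np

def latin_hypercube(n_runs, bounds, rng):
    """Latin hypercube sample: each input range is cut into n_runs equal strata
    and every stratum is used exactly once for every input."""
    bounds = np.asarray(bounds, dtype=float)              # shape (n_inputs, 2)
    n_inputs = bounds.shape[0]
    # One random point inside each stratum, for every input
    u = (np.arange(n_runs)[:, None] + rng.random((n_runs, n_inputs))) / n_runs
    # Shuffle the strata independently per input so the columns are not coupled
    for j in range(n_inputs):
        u[:, j] = u[rng.permutation(n_runs), j]
    low, high = bounds[:, 0], bounds[:, 1]
    return low + u * (high - low)

def toy_mechanistic_model(x):
    """Hypothetical stand-in for a slow simulator: 3 inputs -> 5 outputs."""
    a, b, c = x
    t = np.linspace(0.0, 1.0, 5)
    return a * np.exp(-b * t) + c * t ** 2

rng = np.random.default_rng(0)
bounds = [(1.0, 2.0), (0.5, 3.0), (-1.0, 1.0)]           # hypothetical input ranges
X = latin_hypercube(50, bounds, rng)                     # 50 simulation runs
Y = np.array([toy_mechanistic_model(x) for x in X])      # run the "simulator" once per run
print(X.shape, Y.shape)                                  # (50, 3) (50, 5)
```

The simulated pairs `X`, `Y` are then the raw material for fitting the metamodel.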

The input-output relationships in the simulated data from the mechanistic model are described with the same methods that are used for modelling the relationship between empirical measurements of inputs and outputs. These so-called subspace regression methods, extensions of methods originally developed in the field of chemometrics (ref. H. Martens & T. Næs (1989) Multivariate Calibration. J. Wiley & Sons Ltd., Chichester, UK), are fast to compute, give good input-output prediction models and are designed to give users a graphical overview and insight.
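For illustration, one of the simplest bilinear subspace regressions, principal component regression (PCR), can be sketched in a few lines of NumPy. It is a stand-in chosen for brevity rather than our production method (PLS regression is the more usual chemometric choice), and the simulation data, `fit_pcr` and `predict_pcr` are hypothetical.

```python
import numpy as np

def fit_pcr(X, Y, n_components):
    """Principal component regression: regress the outputs Y on the first
    n_components principal-component scores of the scaled inputs X."""
    x_mean, x_std, y_mean = X.mean(axis=0), X.std(axis=0), Y.mean(axis=0)
    Xs = (X - x_mean) / x_std                            # centre and scale inputs
    _, _, Vt = np.linalg.svd(Xs, full_matrices=False)
    P = Vt[:n_components].T                              # x-loadings (n_inputs x A)
    T = Xs @ P                                           # scores (n_runs x A)
    Q, *_ = np.linalg.lstsq(T, Y - y_mean, rcond=None)   # y-loadings (A x n_outputs)
    return {"x_mean": x_mean, "x_std": x_std, "y_mean": y_mean, "B": P @ Q}

def predict_pcr(model, X):
    """Bilinear prediction: one centring/scaling step and one matrix product."""
    Xs = (X - model["x_mean"]) / model["x_std"]
    return model["y_mean"] + Xs @ model["B"]

# Hypothetical simulation set: 60 runs, 4 inputs, a 20-point output profile
rng = np.random.default_rng(1)
X = rng.uniform(0.0, 1.0, size=(60, 4))
grid = np.linspace(0.0, 1.0, 20)
Y = (X[:, [0]] * np.sin(np.pi * grid) + X[:, [1]] * grid
     + 0.3 * X[:, [2]] ** 2 + 0.05 * rng.standard_normal((60, 20)))

metamodel = fit_pcr(X, Y, n_components=3)
Y_hat = predict_pcr(metamodel, X)
r2 = 1.0 - np.sum((Y - Y_hat) ** 2) / np.sum((Y - Y.mean(axis=0)) ** 2)
print(f"R^2 on the simulation set: {r2:.3f}")
```

In practice the number of components would be chosen by cross-validation rather than fixed in advance.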

The laws of physics as implemented in the mechanistic model still apply in the obtained metamodels of the mechanistic model’s behavior. Only now the computations of outputs from inputs can run thousands of times faster, and without risk of local minima or lack of convergence.

Different ways to use multivariate metamodeling.

Multivariate metamodelling can be used for a range of applications:

- Understand and verify underlying relationships in the mechanistic model

- Speed up mechanistic models to enable online use

- Invert the model to estimate internal states

- Combine metamodel outputs with empirical measurements for a hybrid modelling approach that combines “the best of both worlds” and models the process as it really is.

- Find so-called “neutral parameter sets” for optimization of secondary KPIs while keeping the main KPI constant

Understand and verify underlying relationships

Most mechanistic models define how a system’s outputs depend on its inputs according to theory:

\(Outputs \approx F(Inputs)\)

The metamodel of such a theoretical, mechanistic mathematical model is a simpler statistical approximation model:

\(Outputs \approx f(Inputs)\)

Within the range of conditions covered by the simulations, a metamodel in this causal direction is designed to give good descriptions of the mechanistic model’s outputs from its combination of inputs. Such a metamodel gives quantitative predictions and graphical insight into the most critical elements and stages hidden inside the mechanistic model itself. The user gets information at three levels:

- THAT the Inputs to a system determine the Outputs: Fast theoretical predictions of critical properties that are either unobservable but essential, or particularly suited for actual measurements

Example: The known operating conditions of a furnace (e.g. electrode control, input current, raw material charge and cooling rate) predict the inner, hidden position of the electrode tip, or the outer surface temperature and electromagnetic field.

- HOW the Inputs to a system are combined to predict the Outputs under different operating conditions: Global and Local Sensitivity Analysis.

Example: How certain combinations of the furnace’s operating conditions are able to predict its inner or outer properties.

- WHY different combinations of Inputs affect different combinations of Outputs the way they do: Revealing the most important patterns of covariation in the system.

Example: Why a combination of electrode control, input current and raw material charge seems to affect a combination of electrode tip position and outer electromagnetic field, while a different combination of input current and cooling capacity seems to affect the surface temperature distribution.

In other words: the many input/output variations form distinct patterns of causality that can be monitored in real time, in particular if the model is fitted fast enough to relevant measurements.
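The HOW level can be illustrated with two generic sensitivity measures, sketched below in NumPy: a local sensitivity (a finite-difference Jacobian at one operating point) and a simple global one (standardized regression coefficients over a whole design). The model `f` and the random design are hypothetical and do not represent a furnace.

```python
import numpy as np

def local_sensitivity(f, x0, rel_step=1e-4):
    """Local sensitivity: central finite-difference Jacobian df/dx at operating point x0."""
    x0 = np.asarray(x0, dtype=float)
    n_out = np.atleast_1d(f(x0)).size
    J = np.empty((n_out, x0.size))
    for j in range(x0.size):
        h = rel_step * max(1.0, abs(x0[j]))
        step = np.zeros_like(x0)
        step[j] = h
        J[:, j] = (np.atleast_1d(f(x0 + step)) - np.atleast_1d(f(x0 - step))) / (2.0 * h)
    return J

def global_sensitivity(X, y):
    """Global sensitivity: standardized regression coefficients over a design, i.e. the
    change in y (in standard deviations) per standard deviation of each input."""
    Xs = (X - X.mean(axis=0)) / X.std(axis=0)
    ys = (y - y.mean()) / y.std()
    coef, *_ = np.linalg.lstsq(Xs, ys, rcond=None)
    return coef

# Hypothetical 3-input, 2-output model standing in for a (meta)model
def f(x):
    return np.array([2.0 * x[0] + 0.5 * x[1], x[2] ** 2])

J = local_sensitivity(f, [1.0, 1.0, 1.0])     # approx [[2, 0.5, 0], [0, 0, 2]]

rng = np.random.default_rng(5)
X = rng.uniform(0.0, 1.0, size=(100, 3))
src = global_sensitivity(X, 3.0 * X[:, 0] + 0.1 * X[:, 1])   # input 0 dominates, input 2 has no effect
print(J, src)
```

Local measures answer "what happens near this operating point", while global measures summarize the whole simulated range; a nonlinear model can rank inputs differently under the two.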

Speed up models

Many mechanistic mathematical models are highly informative, but too slow for real-time updating. Examples include the nonlinear spatiotemporal dynamics of metallurgical reactions, or of heating and cooling processes. A metamodel of such a slow mechanistic model can be developed to mimic its input/output behavior and make the computations much faster and more understandable.

Establishing a metamodel of a mechanistic model requires some computer simulation work (mostly automatic) and some multivariate data analysis (which requires our expertise). But this work is done once and for all. Once established, our metamodels run very fast, due to their mathematical form (low-dimensional bilinear subspace regressions).
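A minimal sketch of the speed-up idea, using a hypothetical 1-D heat-conduction solver as the "slow" model. Because this toy model is linear in its three inputs, a plain linear regression fitted to eight runs reproduces it essentially exactly; real nonlinear models need the subspace regressions and richer designs described above, and will only be approximated. The printed timings depend on the machine.

```python
import time
import numpy as np

def slow_heat_model(inputs, n_grid=50, n_steps=4000):
    """Hypothetical 'slow' mechanistic model: explicit finite-difference solution of
    1-D heat conduction in a rod. inputs = (T_left, T_right, T_initial)."""
    t_left, t_right, t_init = inputs
    u = np.full(n_grid, float(t_init))
    r = 0.4                                   # alpha*dt/dx**2, kept below 0.5 for stability
    for _ in range(n_steps):
        u[0], u[-1] = t_left, t_right         # fixed-temperature ends
        u[1:-1] = u[1:-1] + r * (u[2:] - 2.0 * u[1:-1] + u[:-2])
    return u

# Run the slow model once per design point (a 2^3 factorial in physical units)
levels = [(300.0, 600.0), (300.0, 500.0), (280.0, 400.0)]
design = np.array(np.meshgrid(*levels, indexing="ij")).reshape(3, -1).T
outputs = np.array([slow_heat_model(x) for x in design])

# Linear metamodel: outputs ≈ [1, inputs] @ coef (exact here because the toy PDE is linear)
A = np.column_stack([np.ones(len(design)), design])
coef, *_ = np.linalg.lstsq(A, outputs, rcond=None)

def metamodel(x):
    """Prediction is a single vector-matrix product."""
    return np.concatenate(([1.0], x)) @ coef

x_new = np.array([450.0, 350.0, 300.0])
t0 = time.perf_counter()
y_slow = slow_heat_model(x_new)
t1 = time.perf_counter()
y_fast = metamodel(x_new)
t2 = time.perf_counter()
print(f"max difference: {np.abs(y_slow - y_fast).max():.2e}")
print(f"slow model: {t1 - t0:.4f} s, metamodel: {t2 - t1:.6f} s")
```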

Moreover, each time the original nonlinear model is applied, it may run into computational problems, such as local minima and lack of convergence. Its bilinear metamodel does not suffer in this way, because those problems were already dealt with during metamodel development.

Estimate internal states

Outputs predicted from a metamodel, e.g. the surface temperature distribution of an engine, may be compared to actually measured profiles of these Outputs, e.g. continuous thermal video monitoring of that engine. This allows us to infer the unknown causal Inputs: inner states and parameter values such as unwanted changes in the inner combustion, heat conductivity or cooling of the engine.

One way to attain this is to search for already computed Output combinations that resemble the measured Output profile, starting with the previous simulation results.
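A minimal sketch of this search, assuming a stored library of earlier simulation runs (hypothetical here: a two-input exponential profile) and plain Euclidean distance between profiles. The input estimate is a distance-weighted average of the closest runs, a simple choice made for illustration only.

```python
import numpy as np

def closest_simulations(Y_sim, y_measured, k=3):
    """Indices of the k simulated output profiles closest (Euclidean distance)
    to a measured profile, plus all distances."""
    dist = np.linalg.norm(Y_sim - y_measured, axis=1)
    return np.argsort(dist)[:k], dist

# Hypothetical library of earlier simulations: (amplitude, decay rate) -> 25-point profile
rng = np.random.default_rng(2)
X_sim = rng.uniform([1.0, 0.5], [2.0, 3.0], size=(200, 2))
t = np.linspace(0.0, 1.0, 25)
Y_sim = X_sim[:, [0]] * np.exp(-X_sim[:, [1]] * t)

# A "measured" profile (synthetic here) and an input estimate from the closest runs
y_measured = 1.5 * np.exp(-1.2 * t)
idx, dist = closest_simulations(Y_sim, y_measured, k=5)
weights = 1.0 / (dist[idx] + 1e-12)          # closer runs count more
x_estimate = weights @ X_sim[idx] / weights.sum()
print(idx, x_estimate)
```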

But an even faster approach is also possible. In many cases it may be more fruitful to invert the modelling direction, compared to the causal direction \(Outputs \approx f(Inputs)\), and model the Inputs from the Outputs:

\(Inputs \approx g(Outputs)\)

That can give an exceptionally fast way to predict unknown input conditions and inner process states from real-time output measurements.
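The inverse direction can be sketched in NumPy by regressing the simulated Inputs on the simulated Outputs. The forward model below (three hidden heat sources seen through 30 surface temperatures) is hypothetical and linear, which makes the inversion exact in the noise-free case; PCR on the outputs stands in for the subspace regression.

```python
import numpy as np

positions = np.linspace(0.0, 1.0, 30)          # 30 hypothetical surface measurement points
centres = np.array([0.2, 0.5, 0.8])            # 3 hypothetical hidden heat sources
kernel = np.exp(-((positions[:, None] - centres[None, :]) / 0.15) ** 2)   # (30, 3)

def forward(sources):
    """Hypothetical forward (causal) model: surface temperatures from source strengths."""
    return 20.0 + np.asarray(sources) @ kernel.T

# 1) Simulate once over a design of hidden inputs
rng = np.random.default_rng(3)
X_sim = rng.uniform(0.0, 10.0, size=(40, 3))
Y_sim = forward(X_sim)

# 2) Inverse metamodel g: Outputs -> Inputs, by PCR on the (many, collinear) outputs
y_mean, x_mean = Y_sim.mean(axis=0), X_sim.mean(axis=0)
_, _, Vt = np.linalg.svd(Y_sim - y_mean, full_matrices=False)
P = Vt[:3].T                                   # output loadings, 3 components
Q, *_ = np.linalg.lstsq((Y_sim - y_mean) @ P, X_sim - x_mean, rcond=None)

def g(y_measured):
    """Inverse metamodel: estimated hidden inputs from a measured output profile."""
    return x_mean + (np.asarray(y_measured) - y_mean) @ P @ Q

# 3) Apply to a synthetic, noisy measurement
true_sources = np.array([3.0, 7.5, 1.0])
y_obs = forward(true_sources) + 0.05 * rng.standard_normal(30)
print(g(y_obs))          # close to true_sources when the noise is small
```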

In addition, the metamodel will automatically give a warning of abnormality if it detects discrepancies between the predicted and measured temperature distributions of the engine surface. This facility is very sensitive, since it ignores patterns of variation that have already been determined to be OK.
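One common way to implement such a warning is to learn the accepted variation patterns with principal component analysis and raise an alarm when the squared residual outside those patterns (often called SPE or Q) exceeds a limit. The sketch below assumes hypothetical temperature profiles and an empirical 99 % limit; it is an illustration, not our monitoring system.

```python
import numpy as np

def fit_residual_monitor(Y_ok, n_components, quantile=0.99):
    """Learn accepted variation patterns (PCA of normal profiles) and an alarm limit
    on the squared prediction error (SPE) these patterns cannot explain."""
    mean = Y_ok.mean(axis=0)
    _, _, Vt = np.linalg.svd(Y_ok - mean, full_matrices=False)
    P = Vt[:n_components].T

    def spe(Y):
        R = np.atleast_2d(Y) - mean
        E = R - R @ P @ P.T            # part of each profile outside the accepted patterns
        return np.sum(E ** 2, axis=1)

    limit = np.quantile(spe(Y_ok), quantile)
    return spe, limit

# Hypothetical "normal" profiles: two known patterns plus small noise
rng = np.random.default_rng(4)
t = np.linspace(0.0, 1.0, 30)
patterns = np.vstack([np.sin(np.pi * t), t])
Y_ok = rng.uniform(0.5, 1.5, size=(200, 2)) @ patterns + 0.01 * rng.standard_normal((200, 30))
spe, limit = fit_residual_monitor(Y_ok, n_components=2)

y_normal = np.array([1.0, 1.0]) @ patterns
y_fault = y_normal.copy()
y_fault[10:15] += 1.0                   # a local hot spot not covered by the known patterns
print(spe(y_normal)[0] > limit, spe(y_fault)[0] > limit)   # False True
```

Because the accepted patterns are projected out first, even a small new pattern stands out against the remaining noise.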

Adapt and expand to real world

Combine metamodel outputs with empirical measurements for a hybrid modelling approach that combines “the best of both worlds” and models the process as it really is.

Improving the process knowledge and also the mechanistic model.

It is generally a good idea to bring background knowledge into the interpretation of process measurements. Even though the boundary conditions may have changed, the laws of physics are the same.

Improving your old mechanistic model. The so-called subspace regression models also provide automatic outlier detection if and when new, unexpected process variation patterns develop in the measurements. These new patterns are used to generate warnings to the operators. Moreover, process properties that have changed are revealed and corrected in terms of model parameter settings that appear to differ from what you expected. Thereby the combined meta-/data-modelling process helps you update the parameter values in your old mechanistic model.

But in addition, your old mechanistic model may not only be updated, but also expanded in this process: unexpected new variation patterns in the hybrid model can be analyzed in more depth, in terms of mechanistic mathematical equations, e.g. differential equations between the inner states, known or unknown, of your process. These model elements may be grafted onto the original mechanistic model, just like a new branch may be grafted onto the trunk of an old fruit tree. Thereby, your old mechanistic model becomes fully adapted to more modern times.

Optimization

Some mechanistic models display an apparent weakness: Several different combinations of Input conditions can give more or less the same Output profile:

\(F(Inputs_1) \approx F(Inputs_2) \approx \dots \approx F(Inputs_N) \approx Outputs\)

This means that the mechanistic model is mathematically ambiguous, in the sense that different input combinations may cause the system to behave in the same way.

Such a collection of parameter combinations with more or less equal effects on the system is called a “neutral parameter set”. And a mechanistic model with neutral parameter sets is called “mathematically sloppy”.
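For a linear or linearized metamodel, outputs ≈ y0 + inputs @ B, the neutral directions are the input directions that the coefficient matrix B maps to zero, and they can be read off a singular value decomposition. A minimal NumPy sketch, with a hypothetical `B` in which the first output depends only on the sum of the first two inputs:

```python
import numpy as np

def neutral_directions(B, rel_tol=1e-8):
    """Input directions d with d @ B ≈ 0 for a linear(ized) metamodel
    outputs ≈ y0 + inputs @ B  (B: n_inputs x n_outputs)."""
    U, s, _ = np.linalg.svd(B, full_matrices=True)
    rank = int(np.sum(s > rel_tol * s.max()))
    return U[:, rank:]            # columns span the (locally) neutral parameter set

# Hypothetical metamodel: output 1 depends on input1 + input2, output 2 on input3
B = np.array([[1.0, 0.0],
              [1.0, 0.0],
              [0.0, 2.0]])
y0 = np.array([10.0, 5.0])
N = neutral_directions(B)                  # one column, proportional to (1, -1, 0)

inputs_1 = np.array([0.3, 0.7, 1.0])
inputs_2 = inputs_1 + 0.4 * N[:, 0]        # a different input combination ...
print(y0 + inputs_1 @ B, y0 + inputs_2 @ B)   # ... with the same outputs
```

In a sloppy rather than strictly ambiguous model, the smallest singular values are small but not zero; raising `rel_tol` then returns near-neutral directions.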

However, in an industrial setting, this apparent weakness may be turned into a great advantage. Assume that this ambiguity is a property of the physical system itself (e.g. a furnace or an engine), and not just due to an error in its mechanistic model.

Assume also that there are multiple key performance indicators (KPIs) for a given system. Maybe there are ways to optimize one key KPI, \(KPI_{Key}\), without sacrificing the other KPIs, \(KPI_{Other}\)?

Then, for a process where a good mechanistic model shows such an ambiguity, its metamodel may list a range of different input combinations that change \(KPI_{Key}\) while not affecting \(KPI_{Other}\).

Seeing this type of ambiguity may allow you to find ways to optimize the Input conditions with respect to \(KPI_{Key}\) without significantly affecting the other desired process Outputs. For instance, by studying the sloppiness of the mechanistic model (its many-inputs-to-one-output behavior, e.g. discovered via its metamodel) you may find new ways to run an engine that reduce CO2 emissions or fuel cost without sacrificing engine power. Or new and less risky ways to control the electrode positioning in a furnace without affecting its productivity.
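Under a linearized metamodel, a step that changes the key KPI while leaving the other KPIs unchanged can be found by projecting the gradient of the key KPI onto the neutral directions of the other KPIs. The sketch below assumes hypothetical gradients of engine power and CO2 emission with respect to three inputs; for a nonlinear model the step only holds to first order and would be repeated with updated gradients.

```python
import numpy as np

def neutral_step(grad_key, grads_other, step=0.1):
    """Step that decreases the key KPI while leaving the other KPIs unchanged (to first
    order): project -grad_key onto the null space of the other KPIs' gradients."""
    G = np.atleast_2d(grads_other).T                     # n_inputs x n_other_kpis
    projector = np.eye(G.shape[0]) - G @ np.linalg.pinv(G)
    return -step * projector @ grad_key

# Hypothetical gradients with respect to three inputs
grad_power = np.array([2.0, 1.0, 0.5])   # other KPI: engine power, to be kept constant
grad_co2 = np.array([1.0, 1.5, 0.2])     # key KPI: CO2 emission, to be reduced
dx = neutral_step(grad_co2, grad_power)
print(grad_power @ dx, grad_co2 @ dx)    # ~0 change in power, negative change in CO2
```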

Idletechs helps you to combine valuable information from both the mechanistic model (via its metamodel) and from modern measurements (e.g. thermal cameras etc), in a way that is understandable for operators and experts alike.

Design of Experiments

One of the essential tools in meta- and hybrid modelling is Design of Experiments (DoE). Proper use of DoE ensures that the parameter space in the mechanistic and simulation-based models is described with a minimum number of combinations of the parameters of interest, i.e. the best subset of experimental runs. The traditional analysis of DoE results, ANalysis Of VAriance (ANOVA), yields statistical inference about the importance of the parameters and distinguishes real effects from noise. The multivariate subspace models give detailed insight into the relationships between samples and variables in experimental designs, especially in situations with multiple outputs. Yet another important aspect in this context is that the risk of overlooking real effects can be estimated a priori by means of power estimation. No experiments should be performed before there exists an estimate of the uncertainty in the outputs of the model.
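To make the DoE vocabulary concrete, the NumPy sketch below builds a two-level full factorial design in coded units, estimates main effects from a hypothetical, noise-free simulator response, and gives a rough power estimate. The power function uses a normal approximation (ignoring the t distribution and the opposite tail), so treat it as a planning aid only.

```python
import itertools
import math
from statistics import NormalDist

import numpy as np

def full_factorial(k):
    """All 2**k runs of a two-level design in coded units (-1 / +1)."""
    return np.array(list(itertools.product([-1.0, 1.0], repeat=k)))

def main_effects(D, y):
    """Effect of each factor: mean response at +1 minus mean response at -1."""
    return np.array([y[D[:, j] > 0].mean() - y[D[:, j] < 0].mean()
                     for j in range(D.shape[1])])

def power_two_level(effect, sigma, n_runs, alpha=0.05):
    """Approximate power to detect a main effect in a balanced two-level design:
    the effect estimate has standard error 2*sigma/sqrt(n_runs)."""
    se = 2.0 * sigma / math.sqrt(n_runs)
    z_crit = NormalDist().inv_cdf(1.0 - alpha / 2.0)
    return NormalDist().cdf(abs(effect) / se - z_crit)

D = full_factorial(3)
y = 10.0 + 1.5 * D[:, 0] - 0.8 * D[:, 1]       # hypothetical simulator response
print(main_effects(D, y))                        # effects of A, B, C: about 3.0, -1.6, 0.0
print(power_two_level(effect=1.0, sigma=1.0, n_runs=8))
print(power_two_level(effect=1.0, sigma=1.0, n_runs=32))   # more runs, higher power
```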

Furthermore, DoE will also clarify possible interaction effects which may not initially have been considered in the mechanistic models. Derived input and output parameters can be added by so-called feature engineering: adding transforms of the initial parameters based on domain-specific knowledge and theory. Temperature, for example, rarely affects a process linearly. Modern optimal designs also allow the definition of constraints known for the system under observation, e.g. that some combinations of parameters will give a fatal outcome of the process. After the initial model has been established, it can be numerically and graphically optimized, which is the basis for one or more confirmation runs for verification.
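These points can be sketched on a small two-level design: an interaction column is simply the product of two coded factors, a temperature factor can be given an Arrhenius-type 1/T feature, and a known unsafe combination can be removed from the candidate runs. Everything below is hypothetical, and choosing an optimal subset of the remaining runs is not shown.

```python
import itertools
import numpy as np

D = np.array(list(itertools.product([-1.0, 1.0], repeat=3)))   # 2^3 design, coded units

def effect(column, y):
    """Contrast: mean response where the column is +1 minus where it is -1."""
    return y[column > 0].mean() - y[column < 0].mean()

# Hypothetical response with an A x B interaction not anticipated in the mechanistic model
y = 5.0 + 1.0 * D[:, 0] + 0.5 * D[:, 1] + 0.75 * D[:, 0] * D[:, 1]
interaction_ab = effect(D[:, 0] * D[:, 1], y)         # 2 x 0.75 = 1.5

# Feature engineering: map coded factor C to a temperature and use 1/T (Arrhenius-type)
temperature_K = 700.0 + 100.0 * D[:, 2]               # 600 K or 800 K
inv_temperature = 1.0 / temperature_K

# Constraint: drop runs where A and B are both high (a hypothetical unsafe combination)
feasible = D[~((D[:, 0] > 0) & (D[:, 1] > 0))]
print(interaction_ab, feasible.shape)
```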